Previously, we talked about how AI can help physicians with burnout by allowing strategic task streamlining, enhanced decision-making, and improved overall efficiency. When used strategically, AI can strengthen the sense of agency and human connection that we need to combat physician burnout.

However, AI is not a panacea. In this episode, I’ll dig into why, and into how Health Architects deal with these challenges so that AI keeps working in their favor and they steer clear of burnout. The upshot is that we need to treat AI with respect, caution, restraint, and a lot of cross-checking.

The Dark Side of the AI Force

Though its appeal is magnetic, and its power and precision are rapidly growing, AI has distinct limitations, some of which I have faced personally. I will share some of these before giving a global summary of all the caveats.

First, we can only go so far with algorithmic predictions since all predictions are just probability-based guesses. It’s important to remember that as an inductive science, medicine can never be 100% certain, so even if there’s a 99% likelihood of an algorithm giving the correct answer, there is a non-zero chance that it is completely off. (In truth, many AI algorithms aim for an area under the ROC (receiver operating characteristic) curve of about 85% as a benchmark. This is a measure of the strength of the prediction, with 100% being a perfect prediction.) Without trying to be dramatic, that gap from perfection could mean the difference between life and death in medicine. For example, following an algorithm that says that sepsis isn’t present (when in fact it is) could lead to our unwittingly withholding life-saving antibiotics or fluids. Missing a pulmonary embolus, heart attack, or stroke could be equally fatal. As a result, practicing physicians and Health Architects must always ask themselves two familiar questions: How much risk am I willing to take? How bad are the consequences if I am wrong?
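To make the arithmetic behind those two questions concrete, here is a minimal Python sketch using purely hypothetical numbers: a sepsis-screening model with 85% sensitivity and 85% specificity, applied to 1,000 patients of whom 10% are assumed to have sepsis. None of these figures come from a real algorithm or study, and the function name `screening_outcomes` is made up for illustration.

```python
def screening_outcomes(patients, prevalence, sensitivity, specificity):
    """Tally the outcomes of a hypothetical screening model.

    All inputs are illustrative assumptions, not data from a real tool.
    """
    sick = patients * prevalence
    healthy = patients - sick

    true_pos = sick * sensitivity      # sick patients the model flags
    false_neg = sick - true_pos        # sick patients the model misses
    true_neg = healthy * specificity   # healthy patients correctly cleared
    false_pos = healthy - true_neg     # healthy patients flagged in error

    # Negative predictive value: how often "no sepsis" is actually right
    npv = true_neg / (true_neg + false_neg)

    return {
        "false_negatives": round(false_neg),
        "false_positives": round(false_pos),
        "npv": round(npv, 3),
    }


result = screening_outcomes(
    patients=1000, prevalence=0.10, sensitivity=0.85, specificity=0.85
)
print(result)
```

Under these assumptions, the model misses 15 of the 100 septic patients and raises 135 false alarms, even though a "no sepsis" answer is right about 98% of the time. A reassuring headline number can still hide the handful of patients for whom being wrong matters most.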

In life outside the hospital, we make decisions all the time using our powers of prediction, whether it is knowing what to wear based on the weather forecast or deciding how fast to drive based on traffic reports. Constantly making predictions based on available – but always limited – data is taxing to our brains, which is why our minds develop mental shortcuts – aka heuristics – to save brainpower.

The trouble with these shortcuts is that they’re often wrong. Sometimes—like in the movies—these misjudgments can be hilariously disastrous. I remember that scene from National Lampoon’s Vacation, where the teenage kids, Rusty and Audrey, are riding in the backseat of their family’s station wagon, headed to the famous Wally World. Between them, their grandmother Edna is sitting silent and peacefully motionless. At first, everything seems fine—until she starts slumping. First onto Audrey, and then onto Rusty, as the annoyed kids shove her back and forth, convinced their grandmother is in a deep sleep. It’s only when their mom looks back from the front seat that the horrifying truth hits all of them at once: Grandma Edna isn’t asleep—she’s dead! Oops. (I know, I’m dating myself here with this reference. But it’s such a great scene. And it’s not a one-off – just think about Weekend at Bernie’s or Monty Python and the Holy Grail for just two other examples of how intuitive predictions of life and death can be played with for maximum comedic effect).

Well, that’s the movies. Returning to medicine, it’s far less amusing when a patient receives the wrong diagnosis—or misses the right treatment at the right time—because our predictions fall apart. The takeaway is simple: AI can be a powerful tool for forecasting, but its predictions should never be accepted blindly since they can create a dangerous illusion of certainty.

Let’s move on to other limitations of AI. Even in medical disciplines like dermatology that tout more robust AI predictions, AI may lead us astray. I found this out first-hand several months ago when my six-year-old developed a rash – something that not only made her uncomfortable but also made her father feel like a pediatric dimwit.

So what did I do? I turned to UpToDate first for help and found a few leads like contact dermatitis, eczema, and atopic dermatitis. But none seemed to really fit the situation. So I turned to ChatGPT to see if my luck would change. I uploaded the following deidentified image and asked it for a diagnosis in a child:

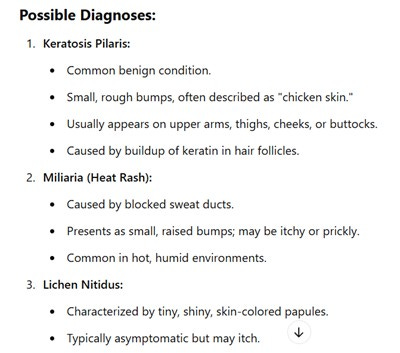

Here's what ChatGPT replied:

Other options listed included: folliculitis, scabies, pityriasis alba, and contact dermatitis.

In total, there were 6 options listed. I looked at them and scratched my head. Which one was the culprit?

Exasperated, I asked a dermatologist friend to help me, texting her the pics and giving her the same details. She immediately replied with “post-viral urticaria” – a diagnosis that never showed up in my ChatGPT list. She told me to give an antihistamine. Within 15 minutes, the rash had disappeared.

I know what you are thinking (especially those of you who are well-versed in generative AI platforms): I didn’t prompt my AI assistant correctly. With the right inputs, ChatGPT wouldn’t have answered so clumsily. There is truth to this assertion. In fact, the art of crafting the right prompts has gained its own moniker: Prompt Engineering. With better prompts, the results are better.

Giving age specifics and other contextual information, however, doesn’t get you totally out of the woods with regard to AI. For example, when I added several additional details like age, location, and nighttime symptoms, ChatGPT homed in on scabies as the only possible diagnosis. Huh? I came away with a distinct feeling of Caveat Emptor. Besides, I wanted to spend more time thinking about the medicine, not learning how to prompt the AI assistant better. Oy.

My third realization about AI’s limits came when I learned just how often it “hallucinates”—a term used to describe when AI confidently generates information that’s completely made up. A physician friend of mine experienced this firsthand when using ChatGPT-4.0 to help find references for a research paper. At first, she was impressed by the list it produced. But on closer inspection, one citation stood out. It looked plausible, even scholarly—but when she tried to verify it separately, her suspicions were confirmed: the reference didn’t exist. The AI had completely fabricated it based on what should be true, not what was true. In fact, I’ve run into the same problem in my own searches. For every five or six accurate citations, there’s often one that’s either misleading or entirely fictional.

Fourth, AI may make us dumber. How? By leaning on generative AI tools, we risk becoming less creative and less critical thinkers. AI can serve up everything from sophisticated graphic designs to language translations in seconds, but it does so at the expense of our creative capacity, which atrophies without attention and practice. Critical thinking skills suffer as well: a recent Microsoft study of knowledge workers found that increased confidence in generative AI was associated with reduced critical thinking (on the other hand, higher self-confidence was associated with more critical thinking). So there are longer-term cognitive tradeoffs to consider when yielding to the power of AI.

Finally, though AI is computer-driven, AI algorithms are not immune from bias. One salient example is a study showing that a commercial healthcare risk algorithm assigned Black patients the same overall risk as white patients, even though the Black patients were clinically sicker (since the algorithm was based on healthcare costs, not actual acuity). In other words, biased data sources produce false conclusions.

But AI bias is not just due to using the wrong data sources. The models behind these algorithms are often refined with human input (in a process called Reinforcement Learning from Human Feedback), so human biases can be baked into the AI pie.

In addition to the accuracy and bias issues I have already highlighted, AI has additional caveats to keep in mind:

● Lack of True Clinical Judgment: AI mimics human clinical reasoning but lacks the real thing, including the ability to consider context, nuance, and patient-specific factors. These form the essence of trust-building, which physicians rely upon for human connection to patients and families.

● Legal and Ethical Risks: Reliance on AI-generated content could expose physicians to liability (e.g., HIPAA violations) if the information is inaccurate or harms a patient, either knowingly or unwittingly. Using common sense, some sagely advise against sharing several things with your AI assistant, including personal identity information (e.g., Social Security numbers, bank account or other financial information), medical results, proprietary corporate secrets or other intellectual property, and login information, since most LLM services cannot assure confidentiality to you alone.

● Unverifiable Sources: AI often does not (and cannot) disclose the sources of its information, making it challenging to verify the reliability of its output.

● Inability to Provide Emotional Support: AI may appear intelligent in many ways, but it is not emotionally intelligent. True empathy is a uniquely human ability and is necessary for effective communication and relationship-building not just with patients but with other healthcare professionals as well.

● Regulatory and Credentialing Issues: Most healthcare systems and governing bodies are still unsure about how to place appropriate guardrails on AI and in general have not approved the use of generative AI in clinical decision-making.

● Complex Patient Cases: Since AI draws from mega data repositories, it is more likely to reflect common (and simpler) manifestations of disease rather than rarer (and more complex) ones. Said differently, AI focuses on finding pigeons over zebras.

So what’s the conclusion, keeping all these limitations in mind? In brief, when it comes to whether we can trust AI: it depends. It depends on the field in which it is used (since accuracy varies from specialty to specialty), it depends on your tolerance for being completely wrong, it depends on the prompts, it depends on the possibility of hallucination, it depends on how much you want to retain creativity and critical thinking skills, it depends on what data inputs are used and how human bias is embedded in the design, and it depends on what regulatory bodies, legal authorities, and privacy hawks come up with as AI matures.

In a conservative field like medicine, risk-taking is not a virtue. This is why many still hesitate when listening to AI recommendations, as illustrated by this JAMA article showing that AI-based prognostications in heart failure patients, even when largely accurate, were roundly ignored by physicians.

Calibrating the Burnout Algorithm

Now, how can the Health Architect work within the limitations of AI as they mitigate burnout? The short answer: cautious optimism marked by thoughtful experimentation, healthy restraint, and frequent cross-checking.

Remember that some parts of our physician workflow lend themselves to incorporating AI more easily than others. For example, we can use AI tools to expand differential diagnoses after we have come up with our own (i.e., to make sure we didn’t overlook something), or we can use AI-based scribes to cut down on documentation time (since we can check our work in real time). When AI is used on its own in these ways, the narrower the application, the better – at least for now. Stick to specific, well-defined tasks whose accuracy you can verify. Health Architects know that such AI applications can still enhance their sense of agency while keeping AI limitations at bay.

On the other hand, Health Architects use AI applications like predictive models and clinical decision support tools to mitigate burnout in a different way. They use them to generate prognoses and treatment options that can then spur conversations and enhance dialogue with patients and with other team members, clinicians and administrators alike. Sharing recommendations and predictions can be a creative catalyst for collaborative decision-making. Remember that medicine, like many other human activities, often feels better when you are working together with others, rather than in isolation.

Take-Home Point: AI can be a powerful tool to ease physician burnout through automation, efficiency, and diagnostic support—but it is not a cure-all. Its predictions can be flawed, biased, or outright fabricated. To use AI wisely in medicine, we must pair cautious optimism with rigorous cross-checking, sound clinical judgment, and an unwavering commitment to human connection. Trust in AI should be earned—not assumed.

Ok, I hope you enjoyed this episode. In upcoming episodes, I’ll address some common myths about burnout before diving back into more elements of the foundation of our building of health and wellness – including a focus on medical ethics. See you then.
